A portrait of Walter Carlos (composer, musician, physicist, technician, and genius) seated at the keyboard of a synthesizer. Walter later became Wendy Carlos. She helped in the development of the Moog synthesizer, Robert Moog’s first commercially available keyboard instrument.

The Past: When Machines First Entered Music

Long before artificial intelligence, music was already a dialogue between humans and machines.

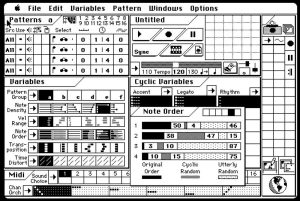

The earliest encounters were mechanical: music boxes, player pianos, and automata transformed rhythm and melody into repeatable processes. These devices did not understand music, but they introduced a radical idea—that sound could be encoded, stored, and reproduced by non-human systems.

The 20th century pushed this further. Magnetic tape, synthesizers, and early digital instruments turned music into data. Composition slowly shifted from pure performance to system design. By the late 1970s, artists such as Kraftwerk embraced machines not as accessories, but as integral creative partners. Technology was no longer hidden backstage; it was on stage.

Later, composers like Brian Eno explored generative music—works defined by rules and probabilities rather than fixed scores. This was a quiet but profound transition: music became something that could emerge, not just be written.

In hindsight, these experiments anticipated artificial intelligence. They framed creativity as a space where constraints, randomness, and structure coexist.

The Present: AI as a Creative System

Over the past decade, AI has moved decisively into music. What changed was not only computing power, but the approach.

Instead of hard-coded rules, machine learning systems began to learn directly from vast musical corpora. Harmony, rhythm, texture, and style became statistical patterns—internalized rather than prescribed.

Today, AI is used to:

- Assist composition and arrangement

- Generate adaptive and interactive soundscapes

- Support mastering, restoration, and sound design

- Enable new creative workflows for professionals and amateurs alike

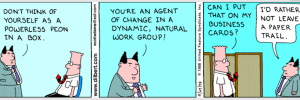

Yet the most important shift is conceptual. AI in music is no longer just about automation. It is about augmentation.

The human role has not disappeared—it has changed. Creativity now lies in framing prompts, curating outputs, setting constraints, and shaping intent. Music creation increasingly resembles orchestration: many elements, human and artificial, working together in time.

What We Can Expect: 2026 and Beyond

Looking ahead, the convergence of AI and music points toward deeper transformations that go well beyond novelty.

From static works to living systems

Music will increasingly adapt in real time—to listeners, environments, and contexts. Soundtracks will no longer be fixed artifacts, but responsive systems.

From individual authorship to co-creation

The question will shift from “Who composed this?” to “How did this come into being?” Creativity will be distributed across humans, models, platforms, and communities.

From tools to ecosystems

Just as digital innovation now happens in interconnected ecosystems, creative AI will evolve through shared models, open standards, and collaborative experimentation rather than isolated products.

From efficiency to meaning

Automation alone is not the destination. The next frontier is expressiveness: systems that can support nuance, emotion, and cultural depth—not by replacing human sensitivity, but by amplifying it.

A Closing Reflection

Music has often been a preview of technological futures.

We trusted machines with sound long before we trusted them with decisions.

As we move into 2026, AI and music together offer a useful reminder: progress is not only about speed or power, but about harmony.

The challenge ahead is not whether machines can create music, but whether we can design systems – technical and social – that allow human creativity to resonate more deeply, not more loudly.

… and a Personal Note

For me, this convergence between AI and music is not theoretical.

I grew up in a time when technology was tactile and noisy: tapes spinning, terminals humming, synthesizers warming up, early networks struggling to connect cities and people. Later came software, mobile networks, platforms, ecosystems – and now AI. Across decades, one pattern has remained constant: technology only matters when it amplifies human expression.

I have seen systems built purely for efficiency fail, and others – designed with culture, people, and timing in mind – endure. Music has always been my quiet reference point in this journey. It taught me early that structure without emotion is sterile, and creativity without discipline is noise.

As we enter 2026, I look at AI the same way I once looked at early digital tools: not as something to fear or glorify, but as something to tune. Like any instrument, its value depends on how we play it, who we play it with, and why.

My hope is that we use this new wave of intelligence not to replace the human voice, but to help it travel further – more clearly, more honestly, and in better harmony with others.

Because in the end, progress, like music, is never a solo.